Server computers are the unsung heroes of the digital age, silently powering the websites, applications, and data that we rely on every day. From the massive data centers that store our information to the small servers running our home networks, these machines play a crucial role in connecting us to the world.

Server computers are specialized computers designed to provide services to other computers, known as clients. Unlike personal computers, which are primarily used for individual tasks, servers are designed to handle multiple requests simultaneously, making them ideal for tasks like hosting websites, storing files, and managing databases.

Server Computer Basics

A server is defined by the role it plays rather than by its hardware: any computer that provides resources and services to clients over a network is acting as a server. Servers are the backbone of modern computing, enabling us to access information, share files, and use online services.

Server and Client Computers

Servers and client computers are distinct types of computing devices with specific roles in a network.

Servers are dedicated to providing resources and services to other computers. They typically have powerful processors, ample memory, and extensive storage capacity to handle the demands of multiple clients simultaneously.

Client computers, on the other hand, are designed to access and utilize the resources and services provided by servers. They are generally less powerful than servers and focus on user interaction and application execution.
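To make the two roles concrete, here is a minimal sketch using Python's standard `socket` and `socketserver` modules. The server waits for connections and answers each request; the client initiates the connection and consumes the reply. The one-line echo protocol and the function names are illustrative assumptions, not a real service.

```python
import socket
import socketserver
import threading


class EchoHandler(socketserver.StreamRequestHandler):
    """Server role: read one line from a client and send it back."""

    def handle(self):
        line = self.rfile.readline()
        self.wfile.write(b"echo: " + line)


def start_server():
    # Port 0 asks the OS for any free port. ThreadingTCPServer handles each
    # client connection in its own thread, so several clients can be served at once.
    server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), EchoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def ask(port, message):
    """Client role: connect, send a request, and return the server's reply."""
    with socket.create_connection(("127.0.0.1", port)) as conn:
        conn.sendall(message.encode() + b"\n")
        with conn.makefile("rb") as reader:
            return reader.readline().decode().rstrip("\n")


if __name__ == "__main__":
    server = start_server()
    port = server.server_address[1]
    print(ask(port, "hello"))  # echo: hello
    server.shutdown()
    server.server_close()
```

The same server object answers any number of clients; each call to `ask` behaves like a separate client machine making a request.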

Types of Servers

Servers are categorized based on the specific services they provide. Here are some common types:

- Web Server: Delivers web pages and other content to users browsing the internet. Examples include Apache, Nginx, and Microsoft IIS.

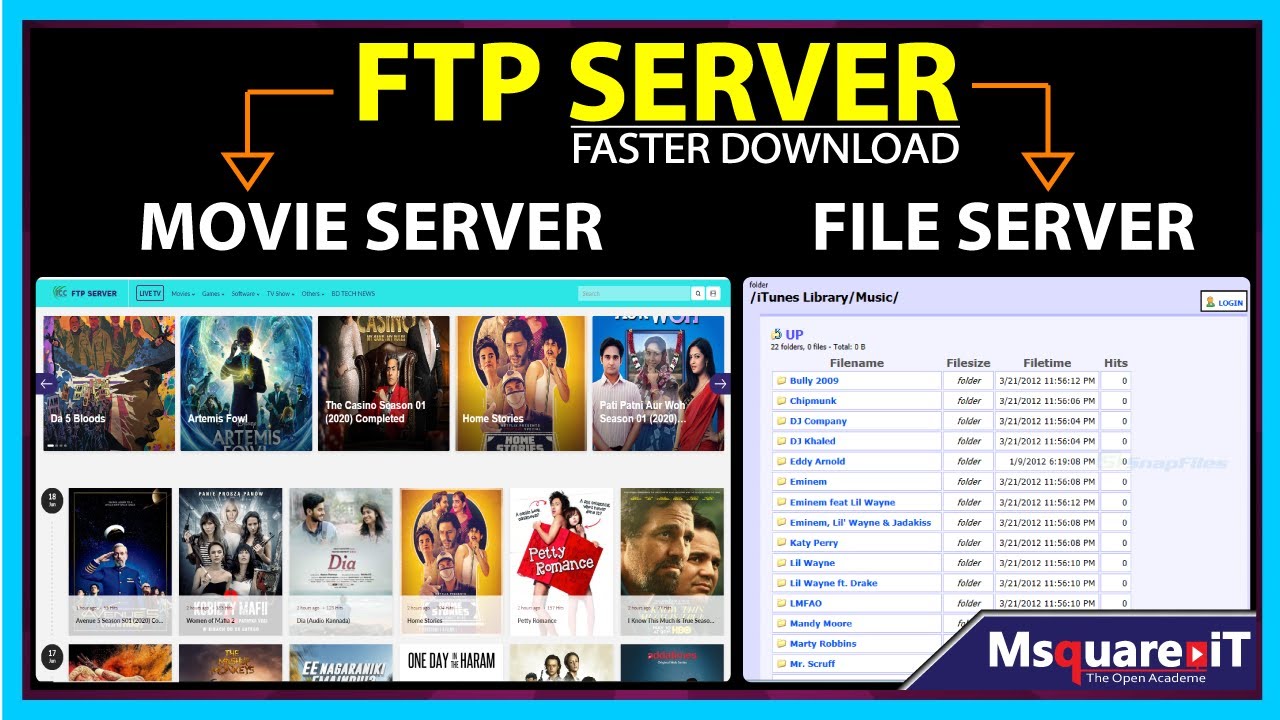

- File Server: Stores and manages files, making them accessible to authorized users on the network. Examples include Windows Server, Linux servers running Samba or NFS, and NAS (Network Attached Storage) devices.

- Database Server: Stores and manages large amounts of data, providing access and manipulation capabilities for applications. Examples include MySQL, PostgreSQL, and Oracle Database.

- Mail Server: Handles email communication, sending and receiving emails between users. Examples include Postfix, Sendmail, and Microsoft Exchange Server.

- Print Server: Manages print jobs and allows users to print documents from their client computers. Examples include Windows Print Server and CUPS (Common UNIX Printing System).

Server Hardware Components

Servers rely on specialized hardware components to function efficiently. Understanding these components is crucial for optimizing server performance and ensuring smooth operation.

Processor (CPU)

The processor, or Central Processing Unit (CPU), is the brain of the server. It executes instructions and processes data, directly affecting server speed and performance. Server CPUs typically have many cores, and many servers hold two or more CPUs, so they can handle complex tasks and process many simultaneous requests in parallel.

Memory (RAM)

Random Access Memory (RAM) serves as the server’s short-term memory. It stores data that the CPU needs to access quickly, enabling faster processing and reducing the time required to retrieve information. Servers typically require large amounts of RAM to handle numerous applications and users simultaneously.

Storage (HDD, SSD)

Storage devices, such as Hard Disk Drives (HDDs) and Solid State Drives (SSDs), provide long-term storage for server data. HDDs are cost-effective and offer high storage capacities, while SSDs deliver significantly faster data access speeds, crucial for applications demanding rapid data retrieval. Servers often utilize a combination of both HDDs and SSDs to balance cost, performance, and storage needs.

Network Interface Card (NIC)

The Network Interface Card (NIC) acts as the bridge between the server and the network. It enables the server to connect to other devices, communicate with users, and transmit data across the network. High-speed NICs are essential for servers handling large volumes of network traffic, ensuring efficient data transfer and seamless connectivity.

Server Operating Systems

A server operating system (OS) is the software that manages the hardware and software resources of a server. It provides a platform for running applications and services that make up the server’s functionality. Choosing the right server operating system is crucial for optimizing performance, security, and compatibility with specific applications.

Comparison of Server Operating Systems

The choice of server operating system depends on various factors, including the specific applications to be hosted, the required security level, and the available budget. Here is a comparison of three server operating systems: Windows Server, Linux, and macOS Server.

- Windows Server: This operating system is known for its user-friendly interface, strong integration with Microsoft products, and robust security features. It is widely used in corporate environments for applications like Active Directory, Exchange Server, and SQL Server.

- Linux: This open-source operating system is known for its flexibility, stability, and cost-effectiveness. It offers a wide range of distributions, each with its own set of features and capabilities. Linux is popular for web servers, databases, and high-performance computing applications.

- macOS Server: Rather than a separate operating system, macOS Server was an add-on app for macOS that provided server features such as Profile Manager for managing Apple devices. It offered a user-friendly interface and tight integration with other Apple products, which made it suitable for small businesses and organizations managing Apple devices. Apple discontinued macOS Server in April 2022.

Advantages and Disadvantages of Server Operating Systems

Each server operating system has its own set of advantages and disadvantages, which can influence the decision-making process.

Windows Server

- Advantages:

- User-friendly interface and ease of management.

- Strong integration with Microsoft products, such as Office 365 and Azure.

- Robust security features and comprehensive support from Microsoft.

- Widely used in corporate environments, making it easy to find skilled professionals.

- Disadvantages:

- Higher licensing costs compared to Linux.

- Can be resource-intensive, requiring more powerful hardware.

- A frequent target of malware and exploits, so regular updates and patches are essential.

Linux

- Advantages:

- Open source; most distributions are free to use and redistribute, which reduces licensing costs.

- Highly customizable and flexible, allowing it to be tailored to specific needs.

- Known for its stability and reliability, backed by a large community of developers and users.

- Disadvantages:

- Steeper learning curve compared to Windows Server.

- May require more technical expertise for management and troubleshooting.

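- Community distributions rely mainly on forums and documentation for support; commercial support from vendors such as Red Hat, SUSE, or Canonical requires a paid subscription.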

macOS Server

- Advantages:

- User-friendly interface and seamless integration with Apple devices and applications.

- Strong security features inherited from macOS.

- Suitable for managing Apple devices and applications in small businesses and organizations.

- Disadvantages:

- Limited compatibility with non-Apple devices and applications.

- Requires Apple hardware, which can cost more than the commodity servers Linux runs on.

- Smaller community than Windows Server and Linux, and no longer developed or supported by Apple.

Popular Server Distributions

Each server operating system is available in several versions or editions; for Linux, these take the form of distributions tailored to specific needs and applications.

Windows Server Versions

- Windows Server 2022: A Long-Term Servicing Channel release offering enhanced security features, improved performance, and support for modern technologies like containers and microservices. It has since been succeeded by Windows Server 2025, released in November 2024.

- Windows Server 2019: A stable and reliable version of Windows Server, widely used in corporate environments for its robust security features and compatibility with existing applications.

- Windows Server 2016: A popular version of Windows Server, offering a balance of features, security, and performance.

Linux Distributions

- Ubuntu Server: A popular and widely used Linux distribution, known for its ease of use, comprehensive package management system, and strong community support.

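- CentOS: Historically a free community rebuild of Red Hat Enterprise Linux (RHEL), favored for its long-term support. CentOS Linux has reached end of life (CentOS 7 in June 2024), and the project continues as CentOS Stream, which tracks slightly ahead of RHEL; Rocky Linux and AlmaLinux now serve as free RHEL-compatible alternatives.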

- Debian: A stable and secure Linux distribution, known for its strong package management system and its focus on security.

- Red Hat Enterprise Linux (RHEL): A commercial Linux distribution, known for its enterprise-grade stability, security, and support.

- SUSE Linux Enterprise Server (SLES): Another commercial Linux distribution, known for its stability, security, and support for mission-critical applications.

macOS Server Versions

- macOS Server was versioned separately from macOS (for example, macOS Server 5.x), and each release required specific macOS versions; its final releases ran on macOS Big Sur and macOS Monterey.

- Apple no longer develops macOS Server. Features such as file sharing, Time Machine backup destinations, and content caching are built into macOS itself, and Apple devices are now typically managed with a mobile device management (MDM) solution.

Server Virtualization

Server virtualization is a technology that allows multiple operating systems and applications to run concurrently on a single physical server. This is achieved by creating virtual machines (VMs) on the physical server, each with its own operating system and resources.

Virtualization offers numerous benefits, including increased server utilization, reduced hardware costs, improved flexibility, and enhanced disaster recovery capabilities.

Virtualization Technologies

Virtualization technologies are software platforms that enable the creation and management of virtual machines. These platforms provide the necessary tools to allocate resources, monitor performance, and manage virtualized environments.

Here are some popular virtualization technologies:

- VMware vSphere: A comprehensive virtualization platform that offers a wide range of features, including server virtualization, storage management, and network management. VMware vSphere is widely used in enterprise environments.

- Microsoft Hyper-V: A virtualization platform developed by Microsoft. Hyper-V is integrated into Windows Server operating systems and provides a robust solution for server virtualization.

- KVM (Kernel-based Virtual Machine): An open-source virtualization platform that is built into the Linux kernel. KVM is a popular choice for organizations looking for a free and flexible virtualization solution.

Optimizing Server Resources and Reducing Costs

Virtualization enables organizations to optimize server resources and reduce costs in several ways:

- Consolidation: Virtualization allows multiple applications and workloads to run on a single physical server, reducing the need for multiple physical servers. This leads to lower hardware costs, reduced power consumption, and less space required for server infrastructure.

- Resource Allocation: Virtualization platforms provide granular control over resource allocation, allowing administrators to assign specific amounts of CPU, memory, and storage to each virtual machine. This helps ensure that resources are used efficiently and helps avoid over-provisioning.

- Disaster Recovery: Virtualization simplifies disaster recovery processes. VMs can be easily migrated to different physical servers or even to cloud environments in case of a disaster. This ensures business continuity and reduces downtime.

“Virtualization can significantly reduce server costs by consolidating workloads and optimizing resource utilization.”
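Consolidation is essentially a packing problem: fit VMs onto as few hosts as possible without exceeding any host's CPU or memory. The sketch below uses the first-fit decreasing heuristic, a common simple approach; it is not how vSphere, Hyper-V, or KVM management tools place VMs, and the VM sizes and host capacity are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    name: str
    vcpus: int
    mem_gb: int


@dataclass
class Host:
    vcpus: int
    mem_gb: int
    vms: list = field(default_factory=list)

    def fits(self, vm):
        used_cpu = sum(v.vcpus for v in self.vms)
        used_mem = sum(v.mem_gb for v in self.vms)
        return used_cpu + vm.vcpus <= self.vcpus and used_mem + vm.mem_gb <= self.mem_gb


def consolidate(vms, host_vcpus, host_mem_gb):
    """Place VMs onto identical hosts with first-fit decreasing; return the hosts used."""
    hosts = []
    # Place the largest VMs first (by memory, then vCPUs); this usually packs more tightly.
    for vm in sorted(vms, key=lambda v: (v.mem_gb, v.vcpus), reverse=True):
        if vm.vcpus > host_vcpus or vm.mem_gb > host_mem_gb:
            raise ValueError(f"{vm.name} is larger than a single host")
        target = next((h for h in hosts if h.fits(vm)), None)
        if target is None:
            target = Host(host_vcpus, host_mem_gb)  # power on another host
            hosts.append(target)
        target.vms.append(vm)
    return hosts


# Hypothetical workloads that might otherwise each get their own physical server.
WORKLOADS = [
    VM("web", 4, 8), VM("db", 8, 32), VM("mail", 2, 8),
    VM("files", 2, 16), VM("ci", 4, 16), VM("print", 1, 2),
]

if __name__ == "__main__":
    hosts = consolidate(WORKLOADS, host_vcpus=16, host_mem_gb=64)
    print(f"{len(WORKLOADS)} VMs on {len(hosts)} hosts")  # 6 VMs on 2 hosts
```

First-fit decreasing is fast and usually close to optimal; real schedulers also weigh live load, affinity rules, and failover capacity.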

Examples of Virtualization in Action

- A small business with limited IT resources can use virtualization to consolidate its servers, reducing the need for multiple physical machines and the associated maintenance costs. This can free up valuable IT staff time for other tasks.

- A large enterprise with a complex IT infrastructure can use virtualization to create a highly available and scalable environment. VMs can be easily moved between servers to balance workloads and ensure high performance.

- A web hosting provider can use virtualization to offer virtual private servers (VPS), giving each customer an isolated virtual machine on shared hardware. This lets the provider host many customers' websites on a single physical server, reducing hardware costs and increasing server utilization.

Server Security

Servers are essential components of modern computing infrastructure, supporting various services and applications. However, they are also vulnerable to security threats that can compromise data, disrupt operations, and inflict significant financial damage. Implementing robust security measures is crucial to protect servers from these threats and ensure the integrity and availability of critical data and services.

Common Security Threats

Servers face a wide range of security threats, each requiring specific mitigation strategies. Understanding these threats is the first step in developing a comprehensive security plan.

- Malware: Malicious software, such as viruses, worms, and ransomware, can infect servers and compromise data, steal sensitive information, or disrupt operations.

- Denial-of-service (DoS) Attacks: These attacks aim to overwhelm a server with traffic, making it unavailable to legitimate users.

- Unauthorized Access: Unauthorized individuals may attempt to gain access to servers through brute-force attacks, exploiting vulnerabilities, or social engineering techniques.

- Data Breaches: Data breaches can result from malicious attacks, accidental data disclosure, or weak security practices, exposing sensitive information to unauthorized parties.

Mitigation Strategies

Several mitigation strategies can be employed to protect servers from these threats:

- Firewalls: Firewalls act as a barrier between a server and external networks, blocking unauthorized access and filtering incoming and outgoing traffic. They analyze network traffic and allow only authorized connections to pass through, preventing malicious actors from reaching the server.

- Intrusion Detection Systems (IDS): IDSs monitor network traffic for suspicious activities, such as unauthorized access attempts, malware infections, or DoS attacks. They alert administrators about potential threats, allowing them to take immediate action to mitigate risks.

- Anti-malware Software: Anti-malware software scans servers for known malware and prevents new infections. It also identifies and removes existing malware, minimizing the impact of infections and protecting sensitive data.

- Regular Security Updates: Software vendors regularly release security patches to address vulnerabilities in their products. Applying these updates promptly is crucial to protect servers from known exploits.

- Strong Passwords and Access Control: Implementing strong passwords and multi-factor authentication for server access can significantly reduce the risk of unauthorized access. Restricting access to specific users and roles based on their responsibilities helps ensure that only authorized personnel can access sensitive data.

- Data Encryption: Encrypting sensitive data at rest and in transit protects it if storage media are stolen or network traffic is intercepted. Encryption algorithms transform data into a format that cannot be read without the key; note that an attacker who gains control of a running server may still reach data the server has already decrypted.

- Regular Security Audits: Regular security audits assess the effectiveness of existing security measures and identify potential vulnerabilities. These audits help organizations proactively address security risks and ensure that their servers remain protected.
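The firewall behavior described above, allowing only authorized connections, is usually expressed as an ordered rule list with a default-deny policy. This sketch evaluates hypothetical rules with the standard `ipaddress` module; the rule format is an illustrative assumption, not the syntax of iptables, nftables, or Windows Defender Firewall.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str  # "allow" or "deny"
    source: str  # source network in CIDR notation, e.g. "10.0.0.0/8"
    port: int    # destination port


# Hypothetical policy: HTTPS from anywhere, SSH only from an admin subnet.
RULES = [
    Rule("allow", "0.0.0.0/0", 443),
    Rule("allow", "10.0.5.0/24", 22),
]


def decide(src_ip, dst_port, rules=RULES):
    """Return the action of the first matching rule; deny if nothing matches."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if addr in ipaddress.ip_network(rule.source) and dst_port == rule.port:
            return rule.action
    return "deny"  # default deny: anything not explicitly allowed is blocked


if __name__ == "__main__":
    print(decide("203.0.113.7", 443))  # allow
    print(decide("203.0.113.7", 22))   # deny: SSH from outside the admin subnet
    print(decide("10.0.5.20", 3306))   # deny: no rule opens the database port
```

Because the first match wins, a specific `deny` rule placed ahead of a broad `allow` rule can carve out exceptions.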

Best Practices for Securing Server Access and Data

Securing server access and data is critical to protecting sensitive information and maintaining operational integrity. Here are some best practices:

- Limit Server Access: Grant access to servers only to authorized personnel who need it for their job responsibilities. This principle of least privilege reduces the risk of unauthorized access and data breaches.

- Implement Strong Authentication: Use multi-factor authentication (MFA) to require users to provide multiple forms of identification before granting access to servers. This significantly increases the security of server access, making it more difficult for unauthorized individuals to gain access.

- Regularly Monitor Server Activity: Monitoring server activity helps identify suspicious behavior, such as unusual login attempts, data access patterns, or unexpected changes in system configuration.

- Regularly Backup Data: Regular data backups are essential to recover data in case of a security breach or system failure. Ensure that backups are stored securely and regularly tested to verify their integrity.

- Educate Users: Educate users about common security threats and best practices for securing their accounts and devices. This includes raising awareness about phishing attacks, malware infections, and social engineering techniques.
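Many MFA setups use time-based one-time passwords (TOTP, RFC 6238): the server and the user's authenticator app share a secret, and both derive a short code from the current time. The sketch below implements the RFC's HMAC-SHA1 variant with the standard library only; a production system would normally use a vetted library and add protections such as rate limiting and replay prevention, which are omitted here.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, for_time: float, step: int = 30, digits: int = 6) -> str:
    """Compute a TOTP code (RFC 6238, HMAC-SHA1) for the given Unix time."""
    counter = int(for_time // step)
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation from RFC 4226: the low 4 bits of the last byte pick an offset.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, window: int = 1, step: int = 30) -> bool:
    """Accept codes from the current step or +/- `window` steps to tolerate clock drift."""
    now = time.time()
    return any(
        hmac.compare_digest(totp(secret, now + i * step, step).encode(), submitted.encode())
        for i in range(-window, window + 1)
    )


if __name__ == "__main__":
    # First SHA-1 test vector from RFC 6238, Appendix B.
    print(totp(b"12345678901234567890", 59, digits=8))  # 94287082
```

The server stores the shared secret per user; a login succeeds only if both the password and a fresh code check out.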

Server Management

Keeping servers running smoothly and efficiently is crucial for any organization. Server management involves a range of tasks, from routine maintenance to complex troubleshooting. Effective server management ensures optimal performance, security, and reliability.

Server Management Tools and Techniques

Server management tools and techniques are essential for system administrators to effectively manage server infrastructure. These tools automate tasks, provide insights into server health, and streamline the management process.

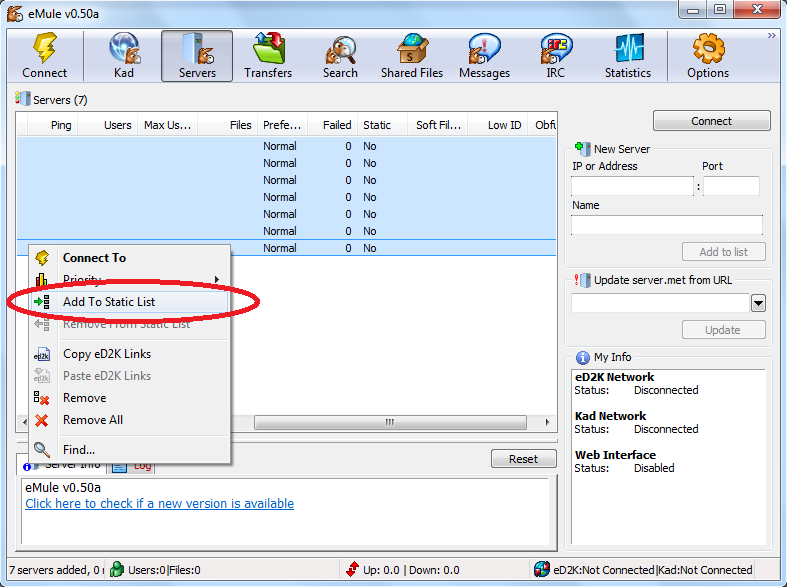

- Remote Access Tools: Tools like SSH (Secure Shell) and RDP (Remote Desktop Protocol) enable administrators to connect to servers remotely, providing secure access for management tasks.

- Command-Line Interfaces (CLIs): CLIs offer a powerful way to interact with servers, allowing for precise control over system configurations and processes.

- Graphical User Interfaces (GUIs): GUIs provide a user-friendly interface for managing servers, simplifying common tasks and offering visual representations of server data.

- Configuration Management Tools: Tools like Puppet, Chef, and Ansible automate configuration management, ensuring consistency and reducing errors in server setups.

- Monitoring Tools: Monitoring tools provide real-time insights into server performance, resource utilization, and potential issues. Examples include Nagios, Zabbix, and Prometheus.

- Log Management Tools: Tools like Splunk and Graylog collect and analyze server logs, providing valuable information for troubleshooting and security investigations.
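Configuration management tools such as Puppet, Chef, and Ansible are built around declaring a desired state and changing a system only when it differs from that state, so re-running a configuration is safe. This sketch shows that idempotency idea for a single setting in a config file; it is in the spirit of Ansible's `lineinfile` module but is not its implementation, and the `ensure_setting` function and file contents are hypothetical.

```python
import tempfile
from pathlib import Path


def ensure_setting(path: Path, key: str, value: str) -> bool:
    """Ensure the config file sets `key` to `value` exactly once.

    Returns True if the file was changed and False if it was already correct,
    so running it repeatedly is safe (idempotent).
    """
    desired = f"{key} {value}"
    old = path.read_text().splitlines() if path.exists() else []
    new, seen = [], False
    for line in old:
        if line.split(maxsplit=1)[:1] == [key]:
            if not seen:
                new.append(desired)  # replace the first occurrence in place
                seen = True
            continue                 # drop any later duplicates of the key
        new.append(line)
    if not seen:
        new.append(desired)          # key was missing: append it
    if new == old:
        return False                 # already in the desired state: change nothing
    path.write_text("\n".join(new) + "\n")
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        cfg = Path(d) / "sshd_config"  # hypothetical stand-in for a real config file
        cfg.write_text("Port 22\nPermitRootLogin yes\n")
        print(ensure_setting(cfg, "PermitRootLogin", "no"))  # True: file updated
        print(ensure_setting(cfg, "PermitRootLogin", "no"))  # False: nothing to do
```

Reporting "changed" versus "unchanged" is what lets these tools show exactly which servers drifted from their intended configuration.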

Role of System Administrators

System administrators play a vital role in server maintenance and troubleshooting. Their responsibilities include:

- Installing and Configuring Servers: Setting up new servers, configuring operating systems, and installing necessary software.

- Monitoring Server Performance: Regularly monitoring server performance metrics, identifying bottlenecks, and optimizing resource utilization.

- Troubleshooting Server Issues: Diagnosing and resolving server problems, including hardware failures, software errors, and security breaches.

- Implementing Security Measures: Implementing and maintaining security protocols, such as firewalls, intrusion detection systems, and access controls.

- Performing Regular Maintenance: Updating software, patching vulnerabilities, and performing routine maintenance tasks to ensure server stability.

- Backup and Recovery: Implementing backup strategies and ensuring data recovery procedures are in place to minimize data loss in case of emergencies.

Server Monitoring Tools

Monitoring tools provide critical insights into server performance and resource utilization. These tools help system administrators identify potential issues before they impact server stability and availability.

- Nagios: A popular open-source monitoring tool that provides real-time alerts for server issues, including hardware failures, software errors, and network problems.

- Zabbix: Another open-source monitoring tool that offers comprehensive monitoring capabilities, including server performance, network traffic, and application health.

- Prometheus: A modern monitoring system designed for scalability and flexibility. It provides powerful metrics collection and analysis capabilities, allowing for detailed insights into server performance.

- Datadog: A cloud-based monitoring platform that offers a wide range of monitoring tools, including server performance, application health, and infrastructure monitoring.

- New Relic: A popular application performance monitoring (APM) tool that provides insights into application performance, code-level metrics, and user experience.
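At their core, tools like Nagios and Zabbix collect metrics and compare them with thresholds, raising warning or critical alerts. This sketch does the same for one machine using only the standard library; the thresholds are hypothetical, and `os.getloadavg` is available only on Unix-like systems.

```python
import os
import shutil

# Hypothetical thresholds as (warning, critical) pairs.
THRESHOLDS = {
    "load_per_cpu": (0.7, 1.0),
    "disk_used_pct": (80.0, 90.0),
}


def collect(path="/"):
    """Gather a few basic metrics from the local machine (Unix-like systems only)."""
    load1, _, _ = os.getloadavg()  # 1-minute load average
    disk = shutil.disk_usage(path)
    return {
        "load_per_cpu": load1 / (os.cpu_count() or 1),
        "disk_used_pct": 100.0 * disk.used / disk.total,
    }


def evaluate(metrics, thresholds=THRESHOLDS):
    """Map each metric to OK, WARNING, or CRITICAL."""
    status = {}
    for name, value in metrics.items():
        warn, crit = thresholds[name]
        if value >= crit:
            status[name] = "CRITICAL"
        elif value >= warn:
            status[name] = "WARNING"
        else:
            status[name] = "OK"
    return status


if __name__ == "__main__":
    print(evaluate(collect()))
```

A real monitoring system runs checks like these on a schedule across many servers, stores the history, and routes alerts to the right people.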

Server Applications

Servers provide services to clients, ranging from simple tasks like hosting websites to complex operations like managing databases or facilitating communication. Server applications are the software programs that deliver these services: they listen for client requests, process them, and send back responses, relying on the operating system to manage the underlying hardware.

Types of Server Applications

Server applications can be broadly categorized based on the services they provide.

- Web Servers: Web servers are responsible for delivering web pages to users. They handle requests from web browsers, retrieve the requested files from the server’s storage, and send them back to the client. Popular web server applications include Apache HTTP Server, Nginx, and Microsoft IIS.

- Database Servers: Database servers store and manage large amounts of data, making it accessible to various applications and users. They provide functionalities like data storage, retrieval, manipulation, and security. Common database server applications include MySQL, PostgreSQL, Oracle Database, and Microsoft SQL Server.

- Email Servers: Email servers handle the sending, receiving, and storage of emails. They receive emails from clients, store them, and deliver them to the intended recipients. Popular email server applications include Postfix, Sendmail, and Microsoft Exchange Server.

- File Servers: File servers store and manage files, making them accessible to multiple users on a network. They provide functionalities like file sharing, storage, and backup. Examples include Samba, NetApp storage systems, and Windows Server.

- Application Servers: Application servers provide a platform for running and managing applications, such as web applications, enterprise applications, and mobile applications. They handle tasks like request processing, data access, and security. Popular examples include JBoss (now WildFly), IBM WebSphere, and Apache Tomcat.
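The basic web-server cycle described above (receive a request, find the file in storage, send it back) can be sketched with Python's built-in `http.server` module. This is for illustration only: the module's documentation states it is not recommended for production, where a server such as Apache, Nginx, or IIS would be used. The `serve_directory` function and the file served are hypothetical.

```python
import functools
import tempfile
import threading
import urllib.request
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer
from pathlib import Path


def serve_directory(root: Path, port: int = 0) -> ThreadingHTTPServer:
    """Start a background web server that serves static files from `root`."""
    # SimpleHTTPRequestHandler maps a URL path such as /index.html to a file under `root`.
    handler = functools.partial(SimpleHTTPRequestHandler, directory=str(root))
    server = ThreadingHTTPServer(("127.0.0.1", port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        (Path(d) / "index.html").write_text("<h1>Hello from the server</h1>")
        server = serve_directory(Path(d))
        url = f"http://127.0.0.1:{server.server_address[1]}/index.html"
        # The client side: a request, just as a browser would send.
        with urllib.request.urlopen(url) as resp:
            print(resp.status, resp.read().decode())
        server.shutdown()
        server.server_close()
```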

Server Application Interactions

Server applications interact with clients and other servers in a variety of ways.

- Client-Server Interaction: Clients, such as web browsers, email clients, or mobile apps, send requests to the server application. The server application processes the request, retrieves the necessary data, and sends a response back to the client.

- Server-Server Interaction: Server applications can also interact with each other to exchange data or services. For example, a web server might request data from a database server to populate a web page or send an email using an email server.
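The web-server-to-database interaction above can be sketched as an HTTP handler that answers each client request by querying a data store. To keep the sketch self-contained, Python's embedded `sqlite3` module stands in for a separate database server such as MySQL or PostgreSQL, which a real deployment would reach over the network through a driver; the table, the `/products` route, and the data are hypothetical.

```python
import json
import sqlite3
import tempfile
import threading
import urllib.request
from contextlib import closing
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from pathlib import Path


def make_database(path: Path) -> None:
    """Create and fill a hypothetical products table."""
    with closing(sqlite3.connect(path)) as db:
        with db:  # commit the inserts as one transaction
            db.execute("CREATE TABLE products (name TEXT, price REAL)")
            db.executemany("INSERT INTO products VALUES (?, ?)",
                           [("keyboard", 49.0), ("monitor", 199.0)])


def make_handler(db_path: Path):
    class ProductHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            if self.path != "/products":
                self.send_error(404)
                return
            # Server-to-server step: the web tier queries the data tier for each request.
            with closing(sqlite3.connect(db_path)) as db:
                rows = db.execute("SELECT name, price FROM products ORDER BY name").fetchall()
            body = json.dumps([{"name": n, "price": p} for n, p in rows]).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # keep the demo output quiet

    return ProductHandler


def start_web_tier(db_path: Path) -> ThreadingHTTPServer:
    server = ThreadingHTTPServer(("127.0.0.1", 0), make_handler(db_path))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        db_path = Path(d) / "shop.db"
        make_database(db_path)
        server = start_web_tier(db_path)
        url = f"http://127.0.0.1:{server.server_address[1]}/products"
        with urllib.request.urlopen(url) as resp:
            print(json.loads(resp.read()))
        server.shutdown()
        server.server_close()
```

The browser only ever talks to the web tier; the database stays behind it, which is also why database servers are usually not exposed to the internet.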

Examples of Server Applications

- Web Server: Apache HTTP Server is one of the most widely deployed web servers and a common choice for hosting PHP applications such as self-hosted WordPress sites.

- Database Server: MySQL is a popular open-source database server used by many web applications, including WordPress, Drupal, and Joomla.

- Email Server: Postfix is a popular open-source email server used by many organizations for sending and receiving emails.

- File Server: Samba is a popular open-source file server that allows users to share files over a network.

- Application Server: Tomcat is a popular open-source application server used for running Java web applications.

Server Architecture

Server architecture refers to the design and structure of a server system, encompassing its hardware, software, and network components. The choice of server architecture depends on factors such as the application’s requirements, scalability needs, and budget constraints.

Single-Server Architecture

Single-server architecture is the simplest form, involving a single physical server handling all the workload. This architecture is suitable for small businesses or applications with limited user traffic and data storage needs.

- Advantages: Easy to manage, cost-effective for small deployments, straightforward setup and configuration.

- Disadvantages: Limited scalability, single point of failure, performance bottlenecks under heavy load.

- Example: A small website hosted on a single server with limited traffic and data storage requirements.

Clustered Server Architecture

Clustered server architecture involves multiple servers working together as a single unit. This architecture enhances reliability and scalability by distributing workload across multiple servers.

- Advantages: High availability, improved performance, scalability through adding more servers.

- Disadvantages: Increased complexity, higher initial investment, potential for configuration issues.

- Example: A large e-commerce website with high traffic and data storage requirements, where multiple servers are used to handle the load and ensure continuous availability.

Cloud-Based Server Architecture

Cloud-based server architecture leverages cloud computing services to host and manage servers. This architecture offers flexibility, scalability, and cost-effectiveness, allowing users to access resources on demand.

- Advantages: On-demand scalability, pay-as-you-go pricing, reduced infrastructure costs, high availability and redundancy.

- Disadvantages: Potential security concerns, dependency on third-party providers, limited control over underlying infrastructure.

- Example: A startup company using cloud services to host their website and applications, allowing them to scale resources based on demand and avoid upfront capital expenditure on hardware.

Server Architecture Design for Scalability and Reliability

Server architecture can be designed for scalability and reliability through various techniques:

- Load Balancing: Distributing incoming traffic across multiple servers to prevent overload on any single server.

- Redundancy: Implementing backup servers to ensure continuous operation even if one server fails.

- Scalable Storage: Using storage systems that can easily expand to accommodate increasing data volumes.

- Modular Design: Building servers with components that can be easily upgraded or replaced.
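Load balancing and redundancy work together: traffic is spread across several servers, and servers marked unhealthy are skipped so that one failure does not take the service down. Below is a minimal round-robin sketch; the backend names are hypothetical, and production load balancers (for example Nginx, HAProxy, or a cloud provider's service) add active health probes, weighting, and session handling.

```python
import itertools


class RoundRobinBalancer:
    """Hand out backends in turn, skipping any marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._cycle = itertools.cycle(self.backends)

    def mark_down(self, backend):
        self.healthy.discard(backend)  # e.g. after a failed health check

    def mark_up(self, backend):
        self.healthy.add(backend)

    def next_backend(self):
        # Check at most one full rotation so we never loop forever.
        for _ in range(len(self.backends)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    print([lb.next_backend() for _ in range(3)])  # ['app-1', 'app-2', 'app-3']
    lb.mark_down("app-2")                         # simulate a failed server
    print([lb.next_backend() for _ in range(4)])  # ['app-1', 'app-3', 'app-1', 'app-3']
```

Round robin is the simplest policy; "least connections" or latency-aware policies tend to work better when requests vary widely in cost.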

Server Deployment

Server deployment is the process of installing, configuring, and making a server operational. This process involves careful planning, hardware and software selection, and security measures to ensure the server functions efficiently and securely.

Planning and Design

Planning and design are crucial for successful server deployment. This stage involves defining the server’s purpose, determining the required resources, and outlining the deployment strategy.

- Define Server Purpose: Clearly identify the server’s primary function. This could be hosting websites, running applications, storing data, or managing network resources. This clarity guides the selection of hardware, software, and security measures.

- Determine Resource Requirements: Assess the server’s workload and anticipate future growth. This includes determining the required processing power, memory, storage space, and network bandwidth. Building in headroom for growth helps the server handle future demand, though heavy over-provisioning wastes money.

- Deployment Strategy: Choose a deployment model, such as physical, virtual, or cloud-based. This choice influences the hardware, software, and security considerations. Physical servers offer greater control, while virtual and cloud-based deployments provide flexibility and scalability.
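One common way to size resources with room for growth is to project today's peak demand forward and divide by a target utilization, so the server is not expected to run flat out. The formula, the `required_capacity` function, and the example numbers are illustrative assumptions, not a sizing standard.

```python
def required_capacity(peak_load: float, annual_growth: float, years: int,
                      target_utilization: float) -> float:
    """Capacity needed so the projected peak stays at or below the target utilization.

    peak_load: today's peak demand in any unit (requests/s, vCPUs, GB of RAM, ...)
    annual_growth: expected yearly growth as a fraction, e.g. 0.2 for 20%
    target_utilization: fraction of capacity you are willing to use at peak, e.g. 0.7
    """
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    projected_peak = peak_load * (1 + annual_growth) ** years
    return projected_peak / target_utilization


# Hypothetical: 400 requests/s today, 20% growth per year, 2-year horizon, 70% target.
print(round(required_capacity(400, 0.20, 2, 0.70)))  # 823
```

Here 400 × 1.2² = 576 requests/s at projected peak, and 576 / 0.7 ≈ 823 requests/s of capacity; the gap between 576 and 823 is the deliberate headroom.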

Hardware and Software Installation

After planning, the next step involves installing the necessary hardware and software. This stage requires meticulous attention to detail and adherence to best practices.

- Hardware Installation: This step involves assembling the server components, including the motherboard, CPU, RAM, storage drives, and network interface cards. The process involves connecting these components correctly and ensuring they are securely mounted in the server chassis.

- Operating System Installation: Install the chosen server operating system, such as Windows Server or a Linux distribution. This step typically involves booting the server from installation media (a DVD or USB drive) or over the network (PXE) and following the installer’s instructions.

- Software Installation: Install any necessary software, such as databases, web servers, application servers, and security tools. This step involves downloading the software, running the installation program, and configuring the software according to the server’s purpose.

Configuration and Testing

Once the hardware and software are installed, the server needs to be configured and thoroughly tested. This stage ensures the server operates as intended and meets the performance requirements.

- Server Configuration: Configure the server’s network settings, user accounts, security policies, and other settings. This step involves accessing the server’s administrative interface and modifying the settings according to the server’s purpose and security requirements.

- Performance Testing: Test the server’s performance under various workloads. This involves simulating real-world scenarios and measuring the server’s response times, resource utilization, and overall stability.

- Stress Testing: Stress test the server to identify potential bottlenecks and performance issues. This involves subjecting the server to extreme workloads to assess its resilience and identify areas for improvement.
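Performance testing boils down to sending many requests and summarizing response times, usually as percentiles rather than averages. The sketch below fires concurrent HTTP requests with the standard library and reports p50 and p95 latency; the request count, concurrency, and the stand-in `OkHandler` server are hypothetical, and dedicated tools such as Apache JMeter, k6, or wrk are better suited to real load and stress tests.

```python
import statistics
import threading
import time
import urllib.error
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def timed_get(url: str) -> tuple[int, float]:
    """Fetch `url` once; return (HTTP status, latency in milliseconds)."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            resp.read()
            status = resp.status
    except urllib.error.HTTPError as err:
        status = err.code  # 4xx/5xx responses still count as completed requests
    return status, (time.perf_counter() - start) * 1000


def load_test(url: str, requests: int = 200, concurrency: int = 10) -> dict:
    """Send `requests` GETs using `concurrency` worker threads and summarize latency."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_get, [url] * requests))
    latencies = [ms for _, ms in results]
    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 94 is p95.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "requests": len(results),
        "errors": sum(1 for status, _ in results if status != 200),
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
    }


class OkHandler(BaseHTTPRequestHandler):
    """Stand-in server for the demo: answers every GET with a small 200 response."""

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass


if __name__ == "__main__":
    server = ThreadingHTTPServer(("127.0.0.1", 0), OkHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    print(load_test(f"http://127.0.0.1:{server.server_address[1]}/"))
    server.shutdown()
    server.server_close()
```

Raising `concurrency` step by step until latency or the error count climbs turns the same script into a crude stress test.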

Security Measures

Security is paramount in server deployment. Implementing robust security measures protects the server and its data from unauthorized access, malware, and other threats.

- Firewall Configuration: Configure the server’s firewall to block unauthorized network traffic. This involves creating firewall rules that allow only authorized traffic to access the server.

- Password Management: Implement strong password policies: require long, unique passwords, block common or previously breached passwords, and use password managers to store credentials securely. Current NIST guidance (SP 800-63B) recommends changing passwords when there is evidence of compromise rather than forcing periodic changes.

- Regular Security Updates: Install security updates promptly to patch vulnerabilities and protect the server from known exploits. This involves regularly checking for updates, installing them, and rebooting the server when an update (such as a kernel patch) requires it.
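On the server side, password management also means never storing passwords in plain text. A common approach is a deliberately slow, salted key-derivation function; this sketch uses PBKDF2-HMAC-SHA256 from Python's `hashlib`. The iteration count and the `iterations$salt$hash` storage format are assumptions to adapt to current guidance (for example the OWASP Password Storage Cheat Sheet), and dedicated algorithms such as Argon2 or bcrypt are often preferred.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumption: tune to your hardware and current guidance


def hash_password(password: str) -> str:
    """Return 'iterations$salt_hex$hash_hex' for storage."""
    salt = os.urandom(16)  # a unique random salt per password defeats precomputed tables
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return f"{ITERATIONS}${salt.hex()}${digest.hex()}"


def check_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    iterations, salt_hex, hash_hex = stored.split("$")
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(),
                                 bytes.fromhex(salt_hex), int(iterations))
    return hmac.compare_digest(digest.hex(), hash_hex)


if __name__ == "__main__":
    stored = hash_password("correct horse battery staple")
    print(check_password("correct horse battery staple", stored))  # True
    print(check_password("password123", stored))                   # False
```

Storing the iteration count alongside the hash lets the server raise it later and rehash each password the next time its user logs in.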

Server Trends

The server landscape is constantly evolving, driven by technological advancements and changing user demands. Emerging trends are reshaping how servers are designed, deployed, and managed. Here’s a look at some of the most significant trends and their impact.

Cloud Computing

Cloud computing is the delivery of computing services—including servers, storage, databases, networking, software, analytics, and intelligence—over the Internet (“the cloud”). This enables organizations to access and use computing resources on demand, without the need for direct management of the underlying infrastructure. Cloud computing has become a dominant force in the server market, offering numerous benefits.

- Scalability and Flexibility: Cloud computing provides on-demand scalability, allowing businesses to adjust their computing resources quickly and easily based on their needs. This eliminates the need for upfront investments in hardware and allows for greater flexibility in resource allocation.

- Cost Efficiency: Pay-as-you-go pricing models in cloud computing can significantly reduce IT costs compared to traditional on-premises server infrastructure. Organizations only pay for the resources they use, eliminating the need for large capital expenditures.

- Accessibility and Collaboration: Cloud computing enables remote access to servers and applications, allowing teams to collaborate seamlessly from anywhere with an internet connection.
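On-demand scalability is usually automated by a rule that compares a measured metric with a target. The sketch below uses the core formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric), clamped to configured bounds. It omits the real autoscaler's tolerance band and stabilization windows, and the function name and default bounds are illustrative assumptions.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=10):
    """HPA-style rule: scale the replica count so utilization moves toward the target."""
    # Multiply before dividing to keep the intermediate value as exact as possible.
    desired = math.ceil(current_replicas * current_utilization / target_utilization)
    # Clamp so a spike cannot request unlimited servers and a lull cannot drop to zero.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_utilization=90, target_utilization=60))
print(desired_replicas(4, current_utilization=30, target_utilization=60))
```

With 4 servers at 90% CPU and a 60% target, the rule asks for 6; at 30% it scales in to 2. The upper bound is also what keeps pay-as-you-go costs predictable.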

Edge Computing

Edge computing brings computation and data storage closer to the source of data generation, such as user devices, sensors, and IoT gateways. This reduces latency, improves responsiveness, and enhances data privacy.

- Reduced Latency: By processing data closer to its source, edge computing minimizes the time it takes for data to travel to a centralized data center, resulting in faster response times for applications and services.

- Improved Responsiveness: Edge computing allows for real-time data analysis and decision-making, enabling applications to respond quickly to changing conditions and user interactions.

- Enhanced Data Privacy: Processing data at the edge reduces the need to transmit sensitive information to centralized data centers, improving data privacy and security.
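A quick calculation shows why distance matters for latency. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond, so distance alone sets a floor on round-trip time. The distances below are hypothetical, and real paths add routing detours, queuing, and processing time on top of this lower bound.

```python
# Approximate speed of light in optical fiber: about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone (there and back)."""
    return 2 * distance_km / FIBER_KM_PER_MS


for label, km in [("edge site", 50), ("regional data center", 2000)]:
    print(f"{label:>22}: at least {min_rtt_ms(km):.1f} ms round trip")
```

An edge site 50 km away can answer in as little as 0.5 ms, while a data center 2,000 km away cannot respond in under 20 ms, a gap that matters for real-time control and interactive applications.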

Serverless Computing

Serverless computing allows developers to run code without managing servers. Instead of provisioning and maintaining servers, developers focus on writing and deploying code, while the cloud provider handles all the underlying infrastructure and scaling.

- Simplified Development: Serverless computing eliminates the need for developers to worry about server management tasks, allowing them to focus on building applications.

- Automatic Scaling: Serverless platforms automatically scale resources based on demand, ensuring applications can handle fluctuations in workload without manual intervention.

- Cost Optimization: Pay-per-execution pricing models in serverless computing let developers pay only for the resources their code consumes while it runs, which can lower costs for intermittent or unpredictable workloads. For steady, high-volume workloads, always-on servers may still be cheaper.
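In practice, "writing and deploying code" for a serverless platform often means writing a single handler function that the platform invokes once per request. The sketch below assumes AWS Lambda's Python convention, where the runtime calls a handler with an `event` and a `context` object, and an API Gateway proxy-style event carrying query parameters. The handler name `lambda_handler` and the `name` parameter are illustrative.

```python
import json


def lambda_handler(event, context):
    """Entry point the platform calls for each request; no server code is written."""
    # queryStringParameters may be absent or None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    # Return a proxy-style response: status code, headers, and a string body.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation with a hand-built event; `context` is unused here.
print(lambda_handler({"queryStringParameters": {"name": "Ada"}}, None))
```

Everything outside this function, including provisioning machines, scaling out under load, and scaling to zero when idle, is left to the provider.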

Artificial Intelligence (AI)

AI is transforming server technology by driving demand for powerful hardware and software capable of handling complex AI workloads. AI-powered applications require significant processing power, memory, and storage capacity, leading to the development of specialized server architectures optimized for AI tasks.

- Specialized Hardware: AI workloads often require specialized hardware, such as GPUs and AI accelerators, to handle the intensive computations involved in training and running AI models.

- Optimized Software: Software frameworks and libraries specifically designed for AI workloads are being developed to simplify AI development and deployment.

- Data Management: AI applications rely on vast amounts of data for training and inference. Server technologies are evolving to handle the storage, processing, and management of large datasets.
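To make the memory demands of AI workloads concrete, a common back-of-the-envelope estimate multiplies a model's parameter count by the bytes used to store each parameter. The sketch counts model weights only; activations, inference caches, and (during training) gradients and optimizer state all add substantially more. The model sizes are hypothetical examples.

```python
# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params, dtype="fp16"):
    """Memory for model weights alone, in decimal gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 10**9


for params in (7_000_000_000, 70_000_000_000):
    print(f"{params / 10**9:.0f}B params in fp16: {weight_memory_gb(params):.0f} GB")
```

A 7-billion-parameter model stored in 16-bit precision needs about 14 GB just for its weights, and a 70-billion-parameter model about 140 GB, which is why such workloads run on servers with large-memory accelerators, often several per machine.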

Final Review

Understanding server computers is essential for anyone interested in the inner workings of the internet and the technology that drives our digital world. Whether you’re a tech enthusiast, a business owner, or simply curious about the infrastructure that powers our daily lives, exploring the world of servers offers a fascinating glimpse into the heart of modern technology.

Server computers are powerful machines designed to handle numerous tasks and requests simultaneously. While most people encounter servers in the context of websites and online services, a growing number of individuals are setting up home servers that manage their personal data and media libraries, and even act as a central hub for their smart home devices.

These home servers offer greater control and flexibility compared to cloud-based solutions, allowing users to customize their own infrastructure and enjoy the benefits of a dedicated server for their personal needs.